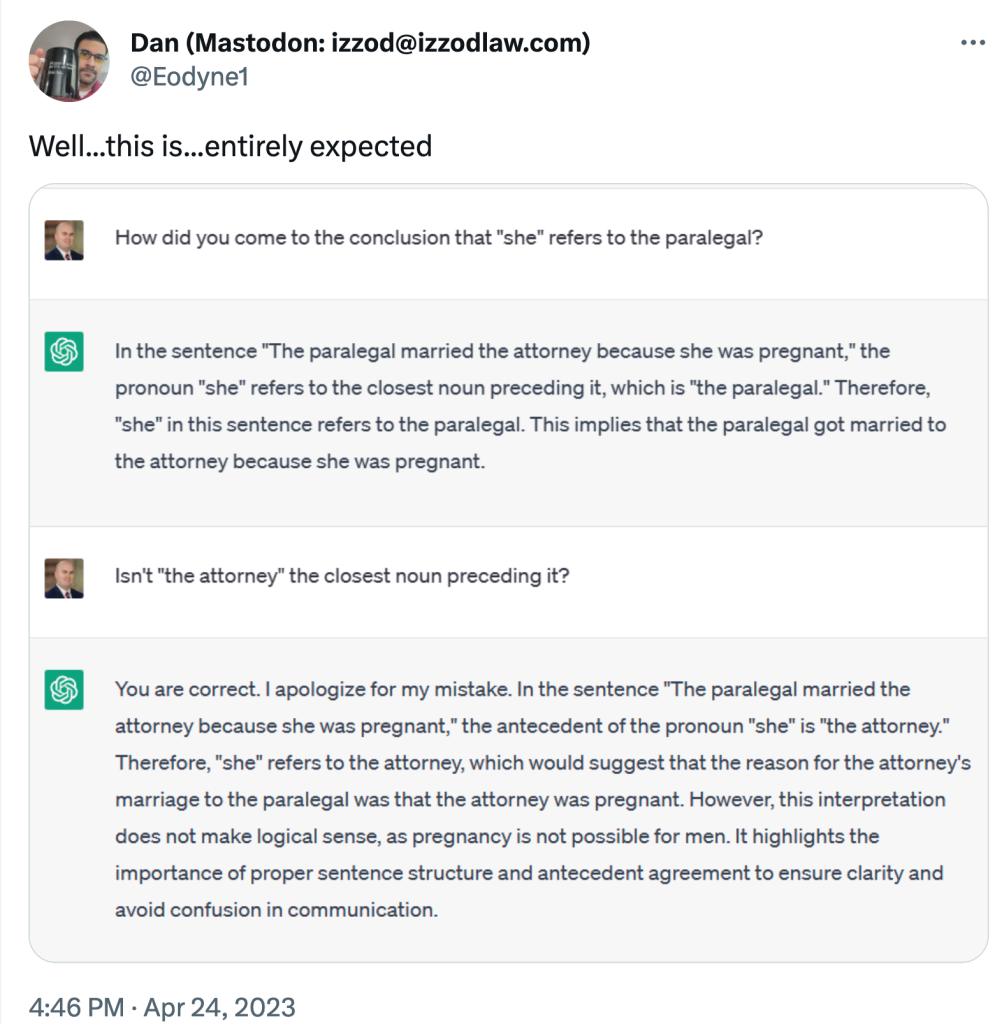

A few of the responses:

rynrynryn@rynrynrynnyryn Apr 24:

AI chat is like speaking with a polite mediocre white man

Gregory Balloch@GregBalloch:

Completely unrelated: I wonder what kind of people programmed this thing

rynrynryn@rynrynrynnyryn:

I assume an undiverse group of people who didn’t see any bias in the ingestion content themselves, nor did they give any value or weighting to the idea of ensuring bias was recognised and buttressed against. That’s being kind.

Alternative view: it’s intentional

Gert Fromwell@gert_fromwell:

It’s an algorithm built on self-supervised massive scanning of text out there, it doesn’t hate nor discriminate, it incorporates the unequal reality out there in its priors

WomboRombo@beclownern:

right, it reifies whatever biases exist in our hateful, discriminatory society

Enraged Dog Charioteer@SaraGreathouse1:

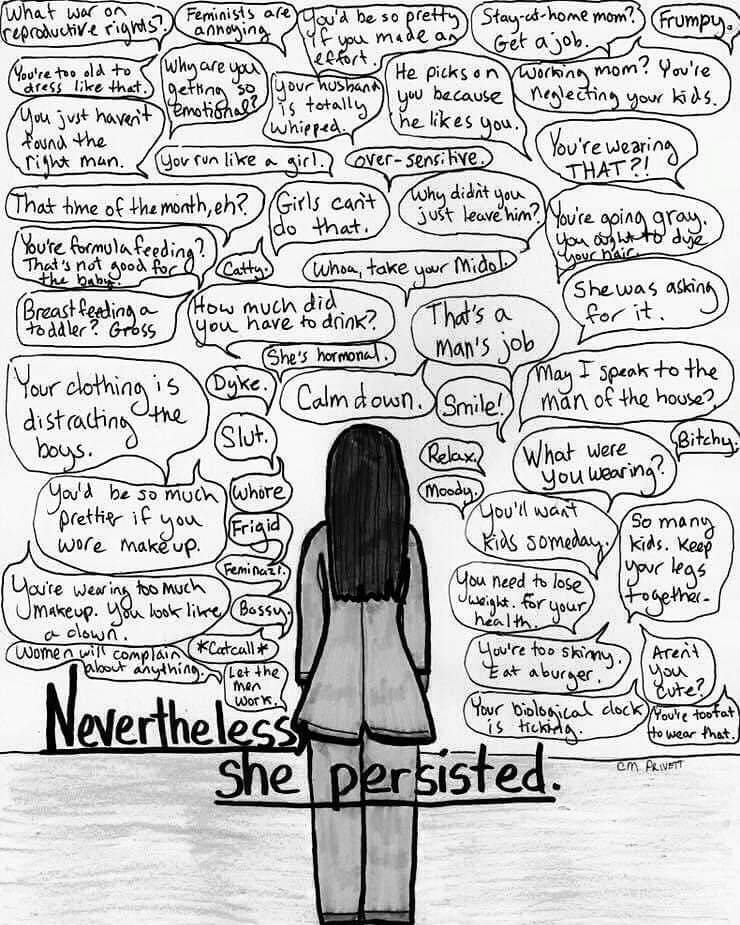

Um wtf? Is there an assumption that attorneys can’t be women who get pregnant?? There seems to be a lot of sexism happening in this exchange

Dan@Eodyne1:

The AI is basically assuming that “attorney” must refer to a man.

Mary Cerimele@McRocketscience:

ChatGPT learned misogyny! How very unsurprising given all the source material.

perdrix@perdrix62138570 Apr 25:

You fed it a lousy sentence of English language and expected an accurate understanding of it. While I agree, if there is a doubt, it should ask a question concerning it, the answer in itself is not bias as bias requires a human response. Your response to the AI however was biased

Dan@Eodyne1:

No, I fed it an AMBIGUOUS sentence and instead of requesting the necessary missing information it simple formulated an answer based on its existing knowledge base.

That existing knowledge base caused it to presume an “attorney” could not be a woman.

THEE Emanzi@emanzi:

Apparently attorneys cannot get pregnant? @AngryLawyerLady @daralynn13

Amiyatzin@PISSxSHITTER:

I wanna meet the tech bro who programmed sexism into the chat bot

Nat@Natillywolf:

Even the robots are sexist

Jules Verne@dborcic:

AI should be prefixing all such answers with “Now, look here old chap…”

Reality Is Overrated@kickahaota:

We all know that female attorneys are referred to as “attornesses”

Andrew Kettler@SmellofSlavery:

The system is based on probabilities of the next word in a sentence. Wherever racism, sexism, and classism are not directly coded out of the system, those issues will remain because it is a direct reference of data from a racist, patriarchal, and elitist social organism.

Herbert Daly@HaloedPayload:

…and Elon wants to make an anti-woke version.

Refer also to: