Tweet of Winter 2024:

“UnAlbertan” CougstaX@cougsta:

Our democracy is under threat by a bunch of fake Christians who don’t believe in reality. Imma gonna need more chocolate foods.

![]() imma gonna need more chocolate foods too.


Commissioners 2-1 vote to ratify motions from Feb. 6 emergency meeting by Mike Jones, Feb 16, Observer-Reporter

Washington County sent nearly $350,000 in cryptocurrency to a Russian hacker last week in order to end the cyberattack that crippled the county’s government and courthouse.

County solicitor Gary Sweat read a lengthy statement during Thursday’s board of commissioners meeting in which he detailed aspects of the cyberattack and why officials thought they needed to hold an emergency meeting on Feb. 6 to pay the ransom.

“Foreign cybercriminals were able to seize control of the county’s network, basically paralyzing all of the county’s operations,” Sweat said. “The attack was unprecedented. I think it’s safe to say no one at this table has ever encountered or experienced such a cyber incident.”

Sweat said the county first detected the cyberattack on Jan. 19 and that it eventually “evolved into a ransomware attack” on Jan. 24 that caused major issues for its network and computers. The county’s information technology department worked closely with federal investigators and third-party cyber experts in an attempt to combat the hackers and contain the malware from spreading while trying to understand the scope of attack.

One of the digital forensic consultants, Sylint, confirmed to county officials on Feb. 5 that the hackers had “large amounts of data” from the county’s network that could be “injurious to the county and its residents” if released on the dark web, Sweat said. With a deadline to pay the ransom looming, Sweat said county officials gathered for a videoconference later that night to weigh their options. In addition to the sensitive data the hackers obtained, county officials worried it could take three to four months to rebuild their data in the network if they didn’t pay the ransom.

Sweat said he advised the commissioners on Feb. 6 to hold an emergency meeting after the hackers gave them a 3:30 p.m. deadline that day to pay the ransom. The commissioners voted 2-1 to authorize payment of up to $400,000 to DigitalMint of Chicago, a firm that specializes in selling cryptocurrency, to settle the cyberattack and help the county restore its computer server.

Sweat said within that payment was the ransom of $346,687, which was sent to the hackers in exchange for a “digital encryption key” to unlock the system with the understanding that no private information would be shared on the dark web. DigitalMint was also paid a fee of $19,313 for its work to facilitate the transfer.

Sweat said Thursday that the federal investigators urged officials not to make public statements because the “entire county campus was considered a crime scene” at the time and the hackers were monitoring media reports of the situation. He said most of the systems are now functioning normally again and they’re taking various steps to better protect the county’s computer network.

“While paying the ransom was not the county’s first choice, we decided that after weighing all factors, it was the best approach,” Sweat said.

The commissioners decided to vote again Thursday to ratify the motions from the Feb. 6 emergency meeting to confirm their legitimacy in case any questions arose over the Sunshine Law, although Sweat said he believed there was a “clear and present danger to life and property” that necessitated the last-minute meeting.

Commissioner Larry Maggi, who voted against the motions at the emergency meeting and did so again Thursday, said he understood why the county decided to pay the ransom, but that it was still “disconcerting” to him.

“I just have a lot of concerns with this,” Maggi said. “I just find this repugnant that we’re giving in to cyber criminals from another country. … We’re living in fear of criminals from Russia. The whole thing stinks (and) that’s why I’m voting no on this.”

Commission Chairman Nick Sherman said he agreed with Maggi’s assessment, but he didn’t think they had a choice in the matter, especially since sensitive information had been compromised, including some involving children who are supervised by the courts. Sherman added that he’s confident the hackers will “go away” now that the ransom has been paid.![]() I am sure paying them will make the criminals bolder and hit the county for more crypto bucks next time. In my view, crypto currency is crime-enabling and anyone worshipping it, like Canada’s Robocol conman Pierre Picklehead, his Fucker Truckers and their Klan, are dirty. Never mind the noise, pollution and horrific waste of electricity crypto causes


“Nobody wanted to pay this. This isn’t something where you wake up in the morning and are excited to pay a cyber ransom,” Sherman said. “This is a very dangerous situation, and Washington County is a victim.”

Sherman and Commissioner Electra Janis then voted to ratify the two motions while Maggi voted against them, just as they had at last week’s emergency meeting.

Alberta power generator fined for operating without regulatory approval, Investigators found Avex had not applied for a permit to build the plant by The Canadian Press, Feb 12, 2024, Calgary Herald

![]() Regulators in Alberta are hypocrites and liars. They didn’t penalize Encana/Ovintiv’s many law violations, including illegally frac’ing and contaminating a community’s drinking water supplies (much more serious a crime than this one by Avex); operating and selling a sour facility as sweet and not even notifying the impacted neighbours or volunteer fire department; fraudulent noise studies and fraudulent baseline waterwell testing; not completing the mandatory noise assessments for endless noisy ugly polluting frac compressors; etc. Instead, EUB (which contained the AUC portion at the time) fraudulently changed Encana’s unlawful noise levels to claim they were compliant and punished me by defaming me as a criminal, then, terrorist, refusing me energy regulation, and engaging in more fraud to enable Encana’s many other crimes. Bunch of slippery cons, in more ways than one.


An Alberta power generator has been fined for running a plant for months without regulatory approval.

The Alberta Utilities Commission has fined Avex Energy nearly a quarter-million dollars for running a natural gas-fired generator while bypassing regulatory tests for safe and unobtrusive operation.![]() While allowing Encana/Ovintiv/Lynx to be as invasive and law violating as it wants, and engaging in fraud to cover for the company.


“They have not been approved to operate,” said commission spokesman Geoff Scotton.![]() Ya, and Encana had no licence to operate a sour facility at Rockyford, a neighbouring community, but regulators let the company get away with it.


According to an agreed statement of facts, officials from what was then Avila Energy approached the commission with plans to build a generating station in the County of Stettler in the summer of 2019. Avila already held permits for operating a natural gas field in that area of central Alberta and planned to use that gas to fuel the plant.![]() A frac’d gas field?


“On the basis of those discussions, Avila believed that no additional approval was required and proceeded on that basis,” the statement says.

The generator was built and fired up on April 23, 2021.

The electricity, eventually reaching 3.5 megawatts, was sold to a bitcoin miner.

Avila, which eventually turned into Avex Energy, planned to generate up to 10 megawatts.

By December, the commission began to receive noise complaints from residents, some as far as nearly three kilometres away.

“The complainants stated that they first noticed the noise in May 2021 and that the noise became increasingly problematic in October 2021, when the additional generating capacity was added,” the agreed statement of facts says.![]() Lynx, which took over Encana’s frac crap at Rosebud, still violates my legal right to quiet enjoyment of my home and land. Regulator engaged in fraud to help Encana violate my legal rights, and then violated my charter rights trying to terrify me quiet. Sleaze bag polluter-enabling bullies, including liar ex supreme court of Canada judge Rosalie Abella.


The utilities commission investigated the noise complaints and found Avex was unlicensed.

Investigators found Avex had not applied for a permit to build the plant. The company had not conducted a noise assessment as required, nor did it receive required environmental approvals.

The Red Willow power plant was shut Dec. 22, 2021. It remains closed.

Avex was “co-operative, forthright and responsive,” during the investigation, the commission said in a summary of the settlement.![]() Encana/Ovintiv was nothing but unlawful, unco-operative, belligerent and mean, yet EUB did nothing but further harm me and protect the company. Law-violating Fuckers.


The total fine was $241,477. It was reduced 30 per cent because of the company’s response to the investigation.

Scotton says such infractions are unusual but do occasionally occur.

“From time to time these situations are brought to our attention.”

![]() Understatement of the century.


Some of the comments:

R Schipper:

The power was sold to bit coin miner? Even if the generator was properly licensed this waste of energy should not be allowed.

Jim Blackstock:

Out of interest, does that oil refinery that had been operating without an approval to operate for 22 years and ‘still’ given a nod to continue by the regulator despite the lack of approval . . . . Does it have its approval yet?

***

Pausing AI Developments Isn’t Enough. We Need to Shut it All Down by Eliezer Yudkowsky, March 29, 2023

Yudkowsky is a decision theorist from the U.S. and leads research at the Machine Intelligence Research Institute. He’s been working on aligning Artificial General Intelligence since 2001 and is widely regarded as a founder of the field.

An open letter published today calls for “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

This 6-month moratorium would be better than no moratorium. I have respect for everyone who stepped up and signed it. It’s an improvement on the margin.

I refrained from signing because I think the letter is understating the seriousness of the situation and asking for too little to solve it.

The key issue is not “human-competitive” intelligence (as the open letter puts it); it’s what happens after AI gets to smarter-than-human intelligence. Key thresholds there may not be obvious, we definitely can’t calculate in advance what happens when, and it currently seems imaginable that a research lab would cross critical lines without noticing.

Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.![]() Taking likely all other species with us.![]() Not as in “maybe possibly some remote chance,” but as in “that is the obvious thing that would happen.” It’s not that you can’t, in principle, survive creating something much smarter than you; it’s that it would require precision and preparation and new scientific insights![]() Pffft. With human laziness and greed-fed propaganda growing ever deeper hatred of science, will humanity allow new scientific insights? Doubtful.![]() , and probably not having AI systems composed of giant inscrutable arrays of fractional numbers.

Without that precision and preparation, the most likely outcome is AI that does not do what we want, and does not care for us nor for sentient life in general. That kind of caring is something that could in principle be imbued into an AI but we are not ready and do not currently know how.

Absent that caring, we get “the AI does not love you, nor does it hate you, and you are made of atoms it can use for something else.”

The likely result of humanity facing down an opposed superhuman intelligence is a total loss. Valid metaphors include “a 10-year-old trying to play chess against Stockfish 15”, “the 11th century trying to fight the 21st century,” and “Australopithecus trying to fight Homo sapiens”.

To visualize a hostile superhuman AI, don’t imagine a lifeless book-smart thinker dwelling inside the internet and sending ill-intentioned emails. Visualize an entire alien civilization, thinking at millions of times human speeds, initially confined to computers—in a world of creatures that are, from its perspective, very stupid and very slow. A sufficiently intelligent AI won’t stay confined to computers for long. In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing.

If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter.

There’s no proposed plan for how we could do any such thing and survive. OpenAI’s openly declared intention is to make some future AI do our AI alignment homework. Just hearing that this is the plan ought to be enough to get any sensible person to panic. The other leading AI lab, DeepMind, has no plan at all.

An aside: None of this danger depends on whether or not AIs are or can be conscious; it’s intrinsic to the notion of powerful cognitive systems that optimize hard and calculate outputs that meet sufficiently complicated outcome criteria. With that said, I’d be remiss in my moral duties as a human if I didn’t also mention that we have no idea how to determine whether AI systems are aware of themselves—since we have no idea how to decode anything that goes on in the giant inscrutable arrays—and therefore we may at some point inadvertently create digital minds which are truly conscious and ought to have rights and shouldn’t be owned.

The rule that most people aware of these issues would have endorsed 50 years earlier, was that if an AI system can speak fluently and says it’s self-aware and demands human rights, that ought to be a hard stop on people just casually owning that AI and using it past that point. We already blew past that old line in the sand. And that was probably correct; I agree that current AIs are probably just imitating talk of self-awareness from their training data. But I mark that, with how little insight we have into these systems’ internals, we do not actually know.

If that’s our state of ignorance for GPT-4, and GPT-5 is the same size of giant capability step as from GPT-3 to GPT-4, I think we’ll no longer be able to justifiably say “probably not self-aware” if we let people make GPT-5s. It’ll just be “I don’t know; nobody knows.” If you can’t be sure whether you’re creating a self-aware AI, this is alarming not just because of the moral implications of the “self-aware” part, but because being unsure means you have no idea what you are doing and that is dangerous and you should stop.

![]()

Frac’ers need to stop too. Notably in water scarce jurisdictions like Alberta where communities are running out of water, or already have, while “regulators” let frac’ers keep injecting and intentionally permanently losing billions of gallons of water to the hydrogeological cycle. Beyond stupid, beyond evil.

![]()

On Feb. 7, Satya Nadella, CEO of Microsoft, publicly gloated that the new Bing would make Google “come out and show that they can dance.” “I want people to know that we made them dance,” he said.

This is not how the CEO of Microsoft talks in a sane world. It shows an overwhelming gap between how seriously we are taking the problem, and how seriously we needed to take the problem starting 30 years ago.

We are not going to bridge that gap in six months.

It took more than 60 years between when the notion of Artificial Intelligence was first proposed and studied, and for us to reach today’s capabilities. Solving safety of superhuman intelligence—not perfect safety, safety in the sense of “not killing literally everyone”—could very reasonably take at least half that long. And the thing about trying this with superhuman intelligence is that if you get that wrong on the first try, you do not get to learn from your mistakes, because you are dead. Humanity does not learn from the mistake and dust itself off and try again, as in other challenges we’ve overcome in our history, because we are all gone.

Trying to get anything right on the first really critical try is an extraordinary ask, in science and in engineering. We are not coming in with anything like the approach that would be required to do it successfully. If we held anything in the nascent field of Artificial General Intelligence to the lesser standards of engineering rigor that apply to a bridge meant to carry a couple of thousand cars, the entire field would be shut down tomorrow.

We are not prepared. We are not on course to be prepared in any reasonable time window. There is no plan. Progress in AI capabilities is running vastly, vastly ahead of progress in AI alignment or even progress in understanding what the hell is going on inside those systems. If we actually do this, we are all going to die.

Many researchers working on these systems think that we’re plunging toward a catastrophe, with more of them daring to say it in private than in public; but they think that they can’t unilaterally stop the forward plunge, that others will go on even if they personally quit their jobs. And so they all think they might as well keep going. This is a stupid state of affairs, and an undignified way for Earth to die, and the rest of humanity ought to step in at this point and help the industry solve its collective action problem.

Some of my friends have recently reported to me that when people outside the AI industry hear about extinction risk from Artificial General Intelligence for the first time, their reaction is “maybe we should not build AGI, then.”

Hearing this gave me a tiny flash of hope, because it’s a simpler, more sensible, and frankly saner reaction than I’ve been hearing over the last 20 years of trying to get anyone in the industry to take things seriously. Anyone talking that sanely deserves to hear how bad the situation actually is, and not be told that a six-month moratorium is going to fix it.

On March 16, my partner sent me this email. (She later gave me permission to excerpt it here.)

“Nina lost a tooth! In the usual way that children do, not out of carelessness! Seeing GPT4 blow away those standardized tests on the same day that Nina hit a childhood milestone brought an emotional surge that swept me off my feet for a minute. It’s all going too fast. I worry that sharing this will heighten your own grief, but I’d rather be known to you than for each of us to suffer alone.”

When the insider conversation is about the grief of seeing your daughter lose her first tooth, and thinking she’s not going to get a chance to grow up, I believe we are past the point of playing political chess about a six-month moratorium.

![]() That grief – which I feel every day for parents (and other species) globally – even when I was a child observing humanity’s hell everywhere, is the main reason I chose not to have kids. But my grief stems mainly from the horrific suffering ahead because of humans gleefully destroying earth’s livability. Kids globally will suffer much worse than Palestinian kids brutalized by Netanyahu and his murderous racist Zionist Klan because of the pollution our species refuses to reduce. AI is creating much worse suffering, never mind that its visuals are gross and unimaginative.


If there was a plan for Earth to survive, if only we passed a six-month moratorium, I would back that plan. There isn’t any such plan.

Here’s what would actually need to be done:

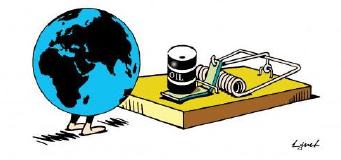

The moratorium on new large training runs needs to be indefinite and worldwide. There can be no exceptions, including for governments or militaries.![]() Trouble is, billionaires will never go along with it; their egos are too big and they are too greedy for more billions, more power, more control.![]() If the policy starts with the U.S., then China needs to see that the U.S. is not seeking an advantage but rather trying to prevent a horrifically dangerous technology which can have no true owner and which will kill everyone in the U.S. and in China and on Earth. If I had infinite freedom to write laws, I might carve out a single exception for AIs being trained solely to solve problems in biology and biotechnology, not trained on text from the internet, and not to the level where they start talking or planning; but if that was remotely complicating the issue I would immediately jettison that proposal and say to just shut it all down.

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

Frame nothing as a conflict between national interests, have it clear that anyone talking of arms races is a fool. That we all live or die as one, in this, is not a policy but a fact of nature. Make it explicit in international diplomacy that preventing AI extinction scenarios is considered a priority above preventing a full nuclear exchange, and that allied nuclear countries are willing to run some risk of nuclear exchange if that’s what it takes to reduce the risk of large AI training runs.

That’s the kind of policy change that would cause my partner and I to hold each other, and say to each other that a miracle happened, and now there’s a chance that maybe Nina will live. The sane people hearing about this for the first time and sensibly saying “maybe we should not” deserve to hear, honestly, what it would take to have that happen. And when your policy ask is that large, the only way it goes through is if policymakers realize that if they conduct business as usual, and do what’s politically easy, that means their own kids are going to die too.

Shut it all down.

We are not ready. We are not on track to be significantly readier in the foreseeable future. If we go ahead on this everyone will die, including children who did not choose this and did not do anything wrong.

Shut it down.

Refer also to:

2023: Why I hate AI

This ruined my Friday. Mr. Baird is a white man, I expect he’ll never understand. He writes “everyone” while not noticing or not caring that AI left many of “everyone” out.

I expect AI will be controlled initially (until AI conquers humans) by rich white powerful misogynistic racist raping religious men (until AI renders them obsolete) and will dangerously amplify and empower humanity’s inhumanity and hideousness.

I believe AI is a threat to all life, but especially non white and non male humans.

… Fuck, these AI white men look creepy, too much alike – an invading thuggery of rapists. No way, would I trust any of them. What I find most repulsive about this “Happy Friday everyone” thread by Mr. Baird are the endless comments (I didn’t include any in this post) cheering for this misogynistic racist AI fabricated history. …

Imagine the criminals AI will let off when it controls our racist misogynistic bigoted police, courts, judges and lawyers …

Frac’ing needs to be shut down too, all of it.

The oil and gas and frac industry is already decimating its promised prosperity and jobs because of human greed automating to get rid of expensive inefficient human workers.